Scalable Specialization

Sarita Adve's Research Group

University of Illinois at Urbana-Champaign

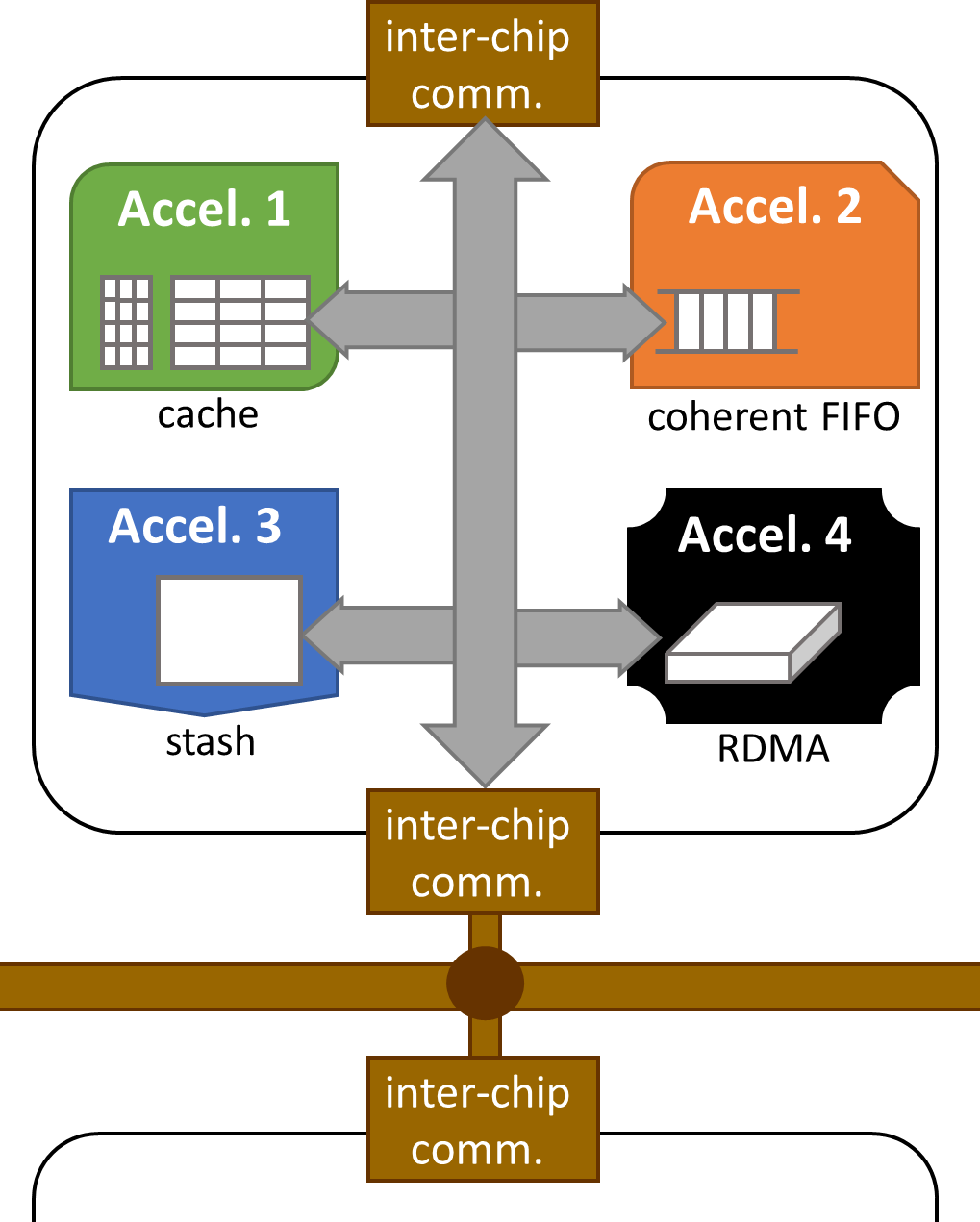

In nearly all compute domains, architectures increasingly rely on parallelism and hardware specialization to exceed the limits of single-core performance. GPUs, FPGAs, and other specialized accelerators are being incorporated into systems ranging from mobile devices to supercomputers and data centers, posing new challenges and opportunities for both hardware and software designers. This project develops architectures and programming interfaces that can flexibly, efficiently, and simply accommodate the rapidly evolving memory demands of these heterogeneous systems. Our cross-layer research includes innovations in coherence protocols and consistency models for heterogeneous systems; novel application-customized coherent storage structures for specialized devices; hardware and software scheduling strategies for heterogeneous workloads; techniques that enable coherent data movement and automatic generation of efficient compute designs in configurable hardware (e.g., FPGAs); a novel hardware-software interface that redefines the notion of an ISA for heterogeneous computing; and innovations in applications that make them amenable to future heterogeneous systems.

Recent results

Our recent work has questioned conventional industry wisdom on coherence protocols and consistency models for heterogeneous systems. Industry efforts to improve efficiency for emerging heterogeneous workloads (especially on GPUs) have tended to add complexity to the programming model through non-coherent scratchpads, scoped synchronization, and relaxed atomics. Each of these methods gains efficiency at the cost of programmability and usability.
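To make the programmability cost of relaxed atomics concrete, here is a generic C++ sketch using standard `std::atomic`. It is not code from this project and is not GPU-specific. It shows that relaxed ordering is enough for a simple event counter, but publishing data through a flag needs release/acquire ordering. Picking the weaker ordering there silently introduces a data race. The function names `relaxed_counter` and `publish_with_flag` are hypothetical, chosen only for illustration.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Relaxed atomics suffice when only the final value matters: each
// fetch_add is indivisible, but no ordering with other memory
// operations is promised.
int relaxed_counter(int num_threads, int increments_per_thread) {
    std::atomic<int> counter{0};
    std::vector<std::thread> workers;
    for (int t = 0; t < num_threads; ++t) {
        workers.emplace_back([&counter, increments_per_thread] {
            for (int i = 0; i < increments_per_thread; ++i) {
                counter.fetch_add(1, std::memory_order_relaxed);
            }
        });
    }
    for (auto& w : workers) w.join();  // join() synchronizes with each worker
    return counter.load(std::memory_order_relaxed);
}

// Publishing data through a flag is different. If the flag used
// memory_order_relaxed, the read of `payload` below would be a data race
// (undefined behavior), and in practice could return a stale value.
// Release/acquire makes the payload write happen-before the read.
int publish_with_flag(int value) {
    int payload = 0;  // plain, non-atomic data
    std::atomic<bool> ready{false};
    int observed = -1;

    std::thread producer([&] {
        payload = value;
        ready.store(true, std::memory_order_release);
    });
    std::thread consumer([&] {
        while (!ready.load(std::memory_order_acquire)) {
            std::this_thread::yield();
        }
        observed = payload;  // guaranteed to see `value`
    });
    producer.join();
    consumer.join();
    return observed;
}
```

For example, `relaxed_counter(4, 1000)` returns 4000 and `publish_with_flag(42)` returns 42. GPU programming models with scoped synchronization add a further choice (which group of threads an atomic or fence applies to), which this CPU-only sketch does not show.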
